Workload Onboarding on Managed Clusters through OpenShift GitOps

Author: Motohiro Abe

Welcome to my technical blog; this is my first entry!

In this write-up, I aim to share some insights for those interested in using ACM (Red Hat Advanced Cluster Management for Kubernetes) and the OpenShift GitOps Operator to deploy OCP (OpenShift Container Platform) clusters and the applications that run on them. I found that tracking down the exact procedure for onboarding workloads on spoke clusters as separate applications, all managed from the same ArgoCD instance on the hub cluster, can be a bit tedious, so I want to share my experience and shed some light on the process.

Precondition

This example assumes that the spoke cluster “sno1” has already been deployed and imported into ACM. This is required so that the placement rule can select the cluster later in the process. It also assumes that sno1 was deployed with the siteconfig generator.

Addon-application-Manager

First, it is important to ensure that the managed cluster has the “feature.open-cluster-management.io/addon-application-manager”: “available” label applied. This label indicates that the Application Manager feature is available and enabled on the cluster. To verify the label, you can use the following command:

```shell
$ oc get managedcluster sno1 -o jsonpath='{.metadata.labels}'
```

Run this on the hub cluster and replace sno1 with the name of your managed cluster. ManagedCluster is a cluster-scoped resource on the hub, so no namespace is needed; the command prints all labels on the sno1 ManagedCluster. Look for the “feature.open-cluster-management.io/addon-application-manager” label and confirm that its value is “available”.

If the label is present and set to “available”, the Application Manager feature is ready to use on the cluster. If the label is missing or has a different value, you need to enable or configure the Application Manager feature on the cluster before proceeding.
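If you want to script this check (for example, in a pipeline), the following minimal Python sketch reads a ManagedCluster object and tests the label. It assumes you saved the output of `oc get managedcluster sno1 -o json` to a file, which is easier to parse reliably than the jsonpath output; the script name `check_app_manager.py` and the functions in it are hypothetical helpers, not part of ACM or the oc CLI.

```python
import json
import sys

# Label reported on the ManagedCluster for the application-manager add-on.
APP_MANAGER_LABEL = "feature.open-cluster-management.io/addon-application-manager"


def app_manager_available(managed_cluster: dict) -> bool:
    """Return True if the add-on label on the ManagedCluster is "available".

    `managed_cluster` is the parsed JSON of `oc get managedcluster <name> -o json`.
    """
    labels = managed_cluster.get("metadata", {}).get("labels") or {}
    return labels.get(APP_MANAGER_LABEL) == "available"


def main(path: str) -> int:
    """Read a saved ManagedCluster JSON file and print the add-on state."""
    with open(path) as f:
        cluster = json.load(f)
    name = cluster.get("metadata", {}).get("name", "<unknown>")
    if app_manager_available(cluster):
        print(f"{name}: application manager add-on is available")
        return 0
    print(f"{name}: application manager add-on is not available")
    return 1


# Only run the CLI when a file path is passed, e.g. `python3 check_app_manager.py sno1.json`.
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

A typical run would be `oc get managedcluster sno1 -o json > sno1.json` followed by `python3 check_app_manager.py sno1.json`; the non-zero exit code lets a pipeline stop before the next steps.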

If the label is missing, you can enable the add-on through siteconfig.yaml by overriding the default KlusterletAddonConfig with a separate file, KlusterletAddonConfigOverride.yaml:

- Add the following crTemplates entry to the sno1 cluster definition (under spec.clusters) in siteconfig.yaml:

```yaml
crTemplates:
  KlusterletAddonConfig: KlusterletAddonConfigOverride.yaml
```

- Next, create (or update) KlusterletAddonConfigOverride.yaml in the same folder as siteconfig.yaml. In this file, override the configuration so that the applicationManager component is enabled:

```yaml
apiVersion: agent.open-cluster-management.io/v1
kind: KlusterletAddonConfig
metadata:
  annotations:
    argocd.argoproj.io/sync-wave: "2"
  name: "{{ .Cluster.ClusterName }}"
  namespace: "{{ .Cluster.ClusterName }}"
spec:
  clusterName: "{{ .Cluster.ClusterName }}"
  clusterNamespace: "{{ .Cluster.ClusterName }}"
  clusterLabels:
    cloud: auto-detect
    vendor: auto-detect
  applicationManager:
    enabled: true # enable the application manager add-on
  certPolicyController:
    enabled: false
  iamPolicyController:
    enabled: false
  policyController:
    enabled: true
  searchCollector:
    enabled: false
```

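After you update these files and sync the ArgoCD application that deploys the cluster, the ManagedCluster is labeled and the application-manager add-on pod starts in the open-cluster-management-agent-addon namespace on the spoke cluster, enabling centralized management and application deployment. You can confirm this on the spoke cluster: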

```shell
$ oc get pod -A | grep add
open-cluster-management-agent-addon   application-manager-584f555b87-ll2wq   1/1   Running   0   88s
```
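To automate this check, you could feed the captured `oc get pod -A` text into a small parser. The Python sketch below assumes the default column order of that command (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE) and the `application-manager-` pod name prefix seen in the output above; the function name is hypothetical.

```python
# Assumed column layout of `oc get pod -A`: NAMESPACE NAME READY STATUS RESTARTS AGE
ADDON_NAMESPACE = "open-cluster-management-agent-addon"
POD_PREFIX = "application-manager-"


def application_manager_ready(pod_listing: str) -> bool:
    """Return True if an application-manager pod is Running with all containers ready."""
    for line in pod_listing.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or truncated lines
        namespace, name, ready, status = fields[:4]
        if namespace != ADDON_NAMESPACE or not name.startswith(POD_PREFIX):
            continue  # header row or an unrelated pod
        ready_count, _, total = ready.partition("/")  # "1/1" -> ("1", "/", "1")
        if status == "Running" and ready_count == total:
            return True
    return False
```

Matching on both the namespace and the name prefix avoids false positives from other pods whose names happen to contain "add", which the `grep add` shortcut would also print.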

ManagedClusterSet and binding rules

To create a ManagedClusterSet custom resource (CR), you can use the following example YAML definition:

```yaml
apiVersion: cluster.open-cluster-management.io/v1beta1
kind: ManagedClusterSet
metadata:
  name: myworkload
```

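Once the ManagedClusterSet exists, you add ManagedClusters to it by labeling them with cluster.open-cluster-management.io/clusterset=myworkload; this is done for sno1 later in this post. Depending on your ACM version, ManagedClusterSet and ManagedClusterSetBinding may be served as cluster.open-cluster-management.io/v1beta2 instead of v1beta1; `oc api-versions | grep cluster.open-cluster-management.io` on the hub shows which versions are available.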

To create a ManagedClusterSetBinding that binds the ManagedClusterSet to the namespace where Red Hat OpenShift GitOps is deployed (typically the openshift-gitops namespace), you can use the following example YAML definition:

```yaml
apiVersion: cluster.open-cluster-management.io/v1beta1
kind: ManagedClusterSetBinding
metadata:
  name: myworkload
  namespace: openshift-gitops
spec:
  clusterSet: myworkload
```

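The binding makes the clusters in the myworkload set available to Placement resources in the openshift-gitops namespace: a Placement can only select clusters from cluster sets that are bound to its own namespace. Together, the cluster set and the binding control which clusters can be used for workload placement and management from the GitOps namespace.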
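The interaction between the cluster set label and the binding can be modeled in a few lines. This is a simplified Python sketch, not ACM's implementation: it only models the label-based membership used in this post, and the class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Label that puts a ManagedCluster into a ManagedClusterSet (see the oc label step).
CLUSTERSET_LABEL = "cluster.open-cluster-management.io/clusterset"


@dataclass
class ManagedCluster:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class ClusterSetBinding:
    namespace: str    # metadata.namespace of the ManagedClusterSetBinding
    cluster_set: str  # spec.clusterSet


def clusters_visible_to_namespace(
    clusters: List[ManagedCluster], bindings: List[ClusterSetBinding], namespace: str
) -> List[str]:
    """Return (sorted) names of clusters that Placements in `namespace` may select.

    Simplified model: a cluster qualifies when its clusterset label names a
    ManagedClusterSet that is bound to `namespace`.
    """
    bound_sets = {b.cluster_set for b in bindings if b.namespace == namespace}
    return sorted(
        c.name for c in clusters if c.labels.get(CLUSTERSET_LABEL) in bound_sets
    )
```

With the myworkload binding in openshift-gitops, sno1 only becomes selectable once it carries the cluster set label; an unlabeled cluster, or a Placement in another namespace, sees nothing.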

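Next, create a Placement custom resource that selects the managed cluster(s) to register with the GitOps operator. The example below tolerates the unreachable and unavailable taints, so a cluster is not dropped from the placement decision while its status is degraded, and it selects clusters whose sites label is sno1. This assumes the sno1 ManagedCluster carries a sites: sno1 label (for example, one set through clusterLabels in siteconfig.yaml):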

```yaml
apiVersion: cluster.open-cluster-management.io/v1beta1
kind: Placement
metadata:
  name: all-openshift-clusters
  namespace: openshift-gitops
spec:
  tolerations:
    - key: cluster.open-cluster-management.io/unreachable
      operator: Exists
    - key: cluster.open-cluster-management.io/unavailable
      operator: Exists
  predicates:
    - requiredClusterSelector:
        labelSelector:
          matchExpressions:
            - key: sites
              operator: In
              values:
                - sno1
```
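To make the selector and tolerations concrete, here is a simplified Python model of how this Placement treats a cluster. It is a sketch under stated assumptions, not ACM's scheduler: it evaluates matchExpressions with the standard Kubernetes label-selector rules, treats tolerations as `operator: Exists` on a key, models only NoSelect taints, and leaves out the cluster-set binding check described earlier as well as prioritizers. All names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

UNREACHABLE = "cluster.open-cluster-management.io/unreachable"
UNAVAILABLE = "cluster.open-cluster-management.io/unavailable"


@dataclass
class Taint:
    key: str
    effect: str = "NoSelect"


@dataclass
class Cluster:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)
    taints: List[Taint] = field(default_factory=list)


def expression_matches(labels: Dict[str, str], expr: dict) -> bool:
    """Evaluate one matchExpressions entry using Kubernetes label-selector rules."""
    key, op = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unsupported operator: {op}")


def placement_selects(
    cluster: Cluster, match_expressions: List[dict], tolerated_keys: Set[str]
) -> bool:
    """Simplified Placement check: taints first, then the label selector.

    `tolerated_keys` stands for tolerations with `operator: Exists`; every
    expression must match (logical AND), as in a Kubernetes label selector.
    """
    for taint in cluster.taints:
        if taint.effect == "NoSelect" and taint.key not in tolerated_keys:
            return False
    return all(expression_matches(cluster.labels, e) for e in match_expressions)


# The selector and tolerations from the all-openshift-clusters Placement above.
SELECTOR = [{"key": "sites", "operator": "In", "values": ["sno1"]}]
TOLERATIONS = {UNREACHABLE, UNAVAILABLE}
```

In this model, sno1 with an unreachable taint is still selected because the Placement tolerates that key, while a cluster without the sites label is never selected.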

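Then create a GitOpsCluster custom resource that registers the managed clusters from the placement decision with the specified ArgoCD instance. Here, argoServer points at the ArgoCD instance in the openshift-gitops namespace on the hub (local-cluster):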

```yaml
apiVersion: apps.open-cluster-management.io/v1beta1
kind: GitOpsCluster
metadata:
  name: gitops-cluster-sample
  namespace: openshift-gitops
spec:
  argoServer:
    argoNamespace: openshift-gitops
    cluster: local-cluster
  placementRef:
    apiVersion: cluster.open-cluster-management.io/v1beta1
    kind: Placement
    name: all-openshift-clusters
```
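Because the GitOpsCluster registers whichever clusters appear in the placement decision, it can help to inspect that decision directly when a cluster does not show up in ArgoCD. This Python sketch assumes you saved `oc get placementdecision -n openshift-gitops -o json` to a file; it relies on PlacementDecisions being labeled with the owning Placement's name and listing clusters under `status.decisions`. The helper names are hypothetical.

```python
import json
import sys
from typing import List

# Label that ties a PlacementDecision to the Placement that produced it.
PLACEMENT_LABEL = "cluster.open-cluster-management.io/placement"


def decided_clusters(decision_list: dict, placement: str) -> List[str]:
    """Collect clusterName values from PlacementDecisions owned by `placement`.

    `decision_list` is the parsed output of
    `oc get placementdecision -n openshift-gitops -o json` (a List with items).
    """
    names = []
    for item in decision_list.get("items", []):
        labels = item.get("metadata", {}).get("labels") or {}
        if labels.get(PLACEMENT_LABEL) != placement:
            continue  # decision belongs to a different Placement
        for decision in item.get("status", {}).get("decisions") or []:
            names.append(decision["clusterName"])
    return sorted(names)


# Usage: python3 decided_clusters.py decisions.json all-openshift-clusters
if __name__ == "__main__" and len(sys.argv) > 2:
    with open(sys.argv[1]) as f:
        print("\n".join(decided_clusters(json.load(f), sys.argv[2])))
```

An empty result usually means the Placement cannot see the cluster yet, for example because the cluster set label or the sites label is missing.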

To add the “sno1” cluster to the myworkload cluster set, apply the cluster set label with oc label:

```shell
$ oc label managedcluster sno1 cluster.open-cluster-management.io/clusterset=myworkload --overwrite
```
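If you drive this step from automation rather than an interactive shell, the same label can be expressed as a JSON merge patch for `oc patch --type merge`. The Python sketch below only builds that patch string; the script and function names are hypothetical.

```python
import json
import sys

CLUSTERSET_LABEL = "cluster.open-cluster-management.io/clusterset"


def clusterset_merge_patch(cluster_set: str) -> str:
    """Return a compact JSON merge patch that sets the cluster set label on a ManagedCluster."""
    patch = {"metadata": {"labels": {CLUSTERSET_LABEL: cluster_set}}}
    return json.dumps(patch, separators=(",", ":"))


# Usage: oc patch managedcluster sno1 --type merge -p "$(python3 clusterset_patch.py myworkload)"
if __name__ == "__main__" and len(sys.argv) > 1:
    print(clusterset_merge_patch(sys.argv[1]))
```

A merge patch replaces the label value if it already exists, which matches the `--overwrite` behavior of the oc label command.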

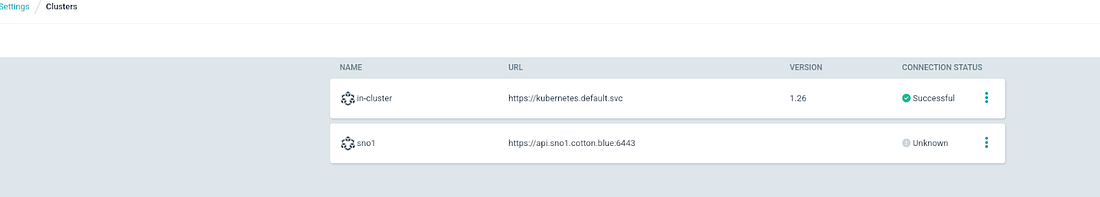

After executing this command, you should observe the following information in ArgoCD.

The connection status for the “sno1” cluster will show as unknown initially since it doesn’t have any applications deployed or being monitored yet.

Conclusion

Great! By defining the placement rules and binding the managed clusters to GitOps, we have laid the foundation for managing and deploying applications in a controlled manner. This setup gives us a streamlined GitOps workflow for our cluster management and application deployment needs.

References

For more detailed information and specific instructions, I recommend referring to the official product documentation.

Red Hat Advanced Cluster Management for Kubernetes (ACM): Configuring Managed Clusters for OpenShift GitOps operator