Creating OpenShift Clusters with the Assisted-Service API

Author: Brandon B. Jozsa

Red Hat quietly released a new method for installing bare-metal OpenShift clusters via a tool called Assisted-Installer, which is based on the Assisted-Service project. What makes this installer unique is that it greatly reduces the infrastructure requirements for provisioning bare metal (e.g. IPMI or Redfish management, DHCP, and web servers). Reduction of these traditional bare-metal provisioning requirements opens up some really interesting opportunities for telco and other provider deployments such as uCPE, RAN, CDN, MEC, and many other Edge and FE types of solutions.

Table of Contents

- Part I: Introduction

- Required Links

- Part II: Generating Refresh Tokens

- Part III: Environment Variables

- Part IV: Creating the Cluster

- Part V: Updating the Cluster for CNI

- Part VI: Static IP Addresses

- Part VII: Generate and Download the Installation Media

- Part VIII: Customizing OpenShift Deployments

- Part IX: Start the Installation

Part I: Introduction

So, if the assisted-installer doesn't require any of the traditional bare-metal bootstrap infrastructure, then how does it work? Think of the assisted-installer first in terms of a declarative API, similar to Kubernetes. If you want to make general changes to a cluster, or more specific changes to an individual host within a given cluster, you first instruct the API of the changes you want. Each bare-metal node boots with a custom-configured liveISO and begins to check in to the API, where it waits in a staged state until it receives instructions.

The liveISO works just like any other liveISO that you might already be familiar with, in that it's loaded into memory and the user can initiate low-level machine actions, such as reformatting the disk and installing an OS. The assisted-service builds these liveISOs automatically, based on user instructions (more on this below). The liveISO includes instructions to securely connect back to the assisted-service API and wait for further instructions; for example, how each member of a given cluster should be configured. This includes ignition instructions, which can wipe the disks, deploy the OS, and apply any customizations. Customizations can include per-node agents (via containers), systemd units, OpenShift customizations, Operators, and even other OpenShift-based applications. The options are nearly limitless, and this allows us to build unique solutions for customers.

This brings me to my main point. How can you deploy a customized OpenShift cluster via the Assisted-Installer? Well, let's get into that now.

This blog post will describe how to install the following:

- OpenShift 4.8.9 (as of 2021-09-15)

- Single or Multi-node OpenShift (introduced in 4.8.x)

- Calico as the default CNI (plus instructions on how to enable eBPF)

- All via the Assisted-Installer over REST calls (think automation)

To access the assisted-installer, log into your cloud.redhat.com account. This will work for 60-day evaluations as well. If you have questions, please leave them in the comments below. So let's get started!

Required Links

- Assisted-Service UI

- Red Hat Token

Part II: Generating Refresh Tokens

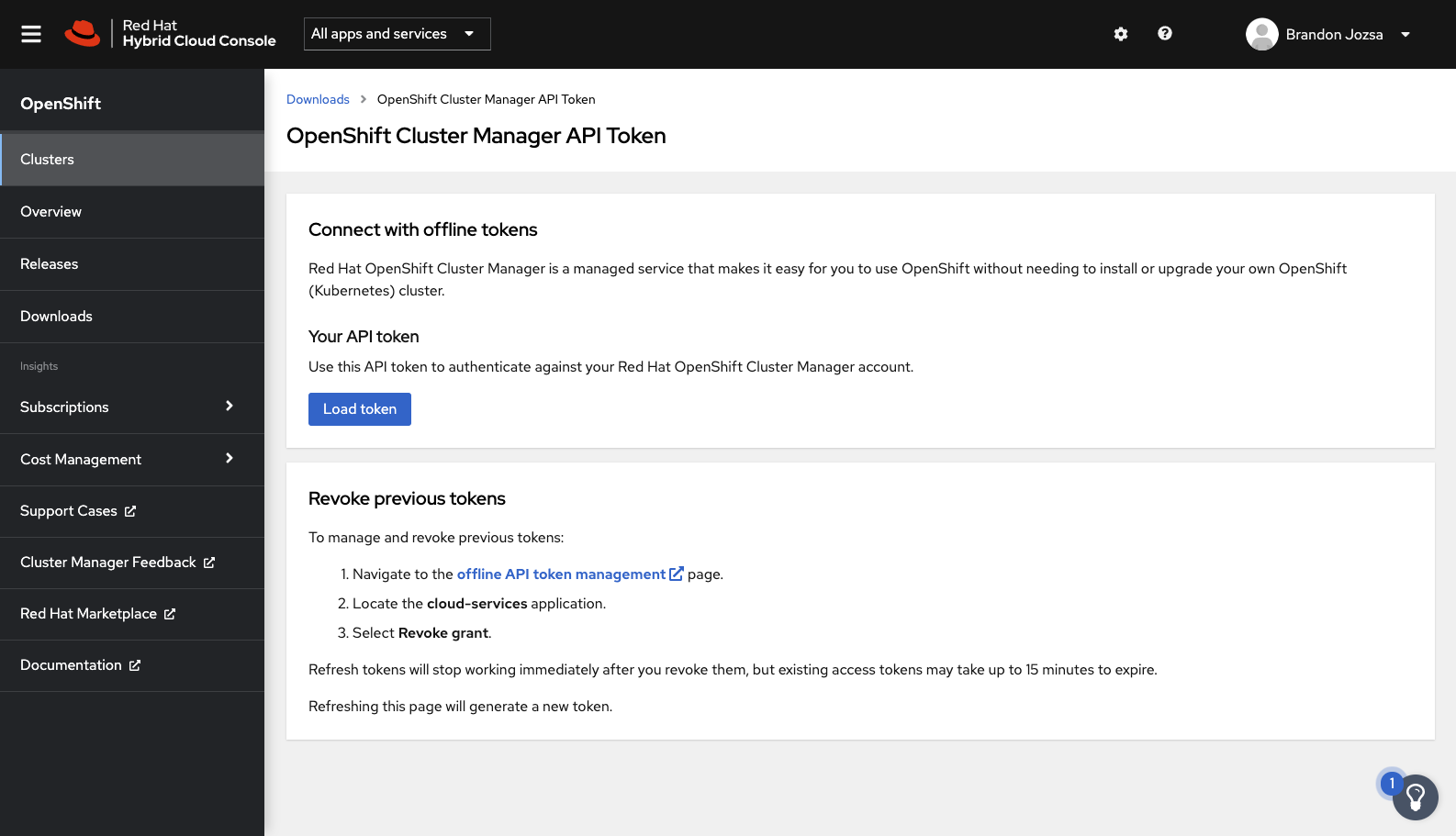

In order to use the Assisted-Service API via cloud.redhat.com, you will need a short-lived bearer token, which this article calls a refresh token. It lasts for approximately 5 minutes, but the simple instructions below make it quick to obtain a new one once your old one has expired. To create it, you will need Red Hat's OpenShift Cluster Manager API Token, which is exchanged for the bearer token.

Once you've logged into the link provided above, you will be presented with a screen like the one shown below. Click on the blue option Load Token to generate an "API token".

Once you have created the API token, use the Copy to clipboard function to provide it as the variable called OFFLINE_ACCESS_TOKEN, which is shown below. From this point forward, you can use this export command to easily generate a new refresh token as the variable TOKEN.

OFFLINE_ACCESS_TOKEN="<PASTE_TOKEN_HERE>"

export TOKEN=$(curl \

--silent \

--data-urlencode "grant_type=refresh_token" \

--data-urlencode "client_id=cloud-services" \

--data-urlencode "refresh_token=${OFFLINE_ACCESS_TOKEN}" \

https://sso.redhat.com/auth/realms/redhat-external/protocol/openid-connect/token | \

jq -r .access_token)

IMPORTANT: If you receive a 400 - Token is expired, please simply rerun the export command above.
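Because the token is short-lived, it can help to check how much time it has left before starting a long sequence of calls. The following is a minimal sketch, assuming the token is a standard JWT whose payload carries a numeric `exp` claim (an assumption about the token format, not something stated above). The name `token_seconds_left` is hypothetical.

```shell
# Hypothetical helper: print the number of seconds until a JWT expires.
# Assumes the token has the form header.payload.signature and that the
# payload contains a numeric "exp" claim.
token_seconds_left() {
  local payload exp
  # The payload is the second dot-separated field, base64url-encoded.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing padding; restore it before decoding.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  exp=$(printf '%s' "$payload" | base64 -d 2>/dev/null \
        | sed -n 's/.*"exp"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p')
  # A missing claim is treated as already expired.
  echo $(( ${exp:-0} - $(date +%s) ))
}
```

For example, `token_seconds_left "$TOKEN"` prints a positive number while the token is still usable; a zero or negative result suggests it is time to rerun the export command.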

VERIFY: After running the export command, verify the TOKEN variable is set by running the following command.

echo ${TOKEN}

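Finally, verify that you can correctly talk with the API with the following curl command. It relies on jq and on the ASSISTED_SERVICE_API variable, which is defined in Part III as api.openshift.com, so set that variable before running it.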

curl -s -X GET "https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters" \

-H "accept: application/json" \

-H "Authorization: Bearer $TOKEN" \

| jq -r '.'

If you are able to verify/return a response via the command above, you can continue to the next steps and deploy a cluster with modifications!

Part III: Environment Variables

Next, review the following variables and modify them as needed. These variables will be used throughout the rest of the demonstration and have been tested to work:

ASSISTED_SERVICE_API="api.openshift.com"

CLUSTER_VERSION="4.8"

CLUSTER_IMAGE="quay.io/openshift-release-dev/ocp-release:4.8.9-x86_64"

CLUSTER_NAME="calico-poc"

CLUSTER_DOMAIN="jinkit.com"

CLUSTER_NET_TYPE="Calico"

CLUSTER_CIDR_NET="10.128.0.0/14"

CLUSTER_CIDR_SVC="172.30.0.0/16"

CLUSTER_HOST_NET="192.168.3.0/24"

CLUSTER_HOST_PFX="23"

CLUSTER_WORKER_HT="Enabled"

CLUSTER_WORKER_COUNT="0"

CLUSTER_MASTER_HT="Enabled"

CLUSTER_MASTER_COUNT="0"

CLUSTER_SSHKEY='ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDE1F7Fz3MGgOzst9h/2+5/pbeqCfFFhLfaS0Iu4Bhsr7RenaTdzVpbT+9WpSrrjdxDK9P3KProPwY2njgItOEgfJO6MnRLE9dQDzOUIQ8caIH7olzxy60dblonP5A82EuVUnZ0IGmAWSzUWsKef793tWjlRxl27eS1Bn8zbiI+m91Q8ypkLYSB9MMxQehupfzNzJpjVfA5dncZ2S7C8TFIPFtwBe9ITEb+w2phWvAE0SRjU3rLXwCOWHT+7NRwkFfhK/moalPGDIyMjATPOJrtKKQtzSdyHeh9WyKOjJu8tXiM/4jFpOYmg/aMJeGrO/9fdxPe+zPismC/FaLuv0OACgJ5b13tIfwD02OfB2J4+qXtTz2geJVirxzkoo/6cKtblcN/JjrYjwhfXR/dTehY59srgmQ5V1hzbUx1e4lMs+yZ78Xrf2QO+7BikKJsy4CDHqvRdcLlpRq1pe3R9oODRdoFZhkKWywFCpi52ioR4CVbc/tCewzMzNSKZ/3P0OItBi5IA5ex23dEVO/Mz1uyPrjgVx/U2N8J6yo9OOzX/Gftv/e3RKwGIUPpqZpzIUH/NOdeTtpoSIaL5t8Ki8d3eZuiLZJY5gan7tKUWDAL0JvJK+EEzs1YziBh91Dx1Yit0YeD+ztq/jOl0S8d0G3Q9BhwklILT6PuBI2nAEOS0Q=='
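Since every later command depends on these variables, a quick check that none of them is empty can save a confusing API error. This is only a sketch; `require_vars` is a hypothetical helper name and is not part of any Red Hat tooling.

```shell
# Hypothetical helper: fail early if any named variable is unset or empty.
require_vars() {
  local name value missing=0
  for name in "$@"; do
    # Look up the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

A possible call would be `require_vars ASSISTED_SERVICE_API CLUSTER_NAME CLUSTER_DOMAIN CLUSTER_SSHKEY`, which returns non-zero and names each variable that still needs a value.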

HINT: All of these instructions are intended to be run from the current directory. Considering this, make sure that the pull-secret.txt and installation files are where you want them to be before continuing.

Next, download your pull-secret.txt and save it in the current working directory.

Next, create a variable with the raw contents of your pull-secret.txt file. This is important, because escape characters should be included as part of this output.

PULL_SECRET=$(cat pull-secret.txt | jq -R .)
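To see what the `jq -R .` step does, here is a small illustration. The helper name `json_string_from_file` is hypothetical, and the sample content used in the note below is made up rather than a real pull secret. The sketch assumes the file holds a single line of JSON, as a downloaded pull secret normally does.

```shell
# Hypothetical helper: emit a one-line file as a single JSON string.
# jq -R reads the line as raw text instead of parsing it, so the inner
# double quotes come out escaped and the result can be embedded as a
# string value inside another JSON document.
json_string_from_file() {
  jq -R . < "$1"
}
```

If a file contained `{"auths":{}}`, the helper would print `"{\"auths\":{}}"`, which is the escaped form that the deployment file expects for its pull secret value.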

Now create an Assisted-Service deployment .json file.

cat << EOF > ./deployment.json

{

"kind": "Cluster",

"name": "$CLUSTER_NAME",

"openshift_version": "$CLUSTER_VERSION",

"ocp_release_image": "$CLUSTER_IMAGE",

"base_dns_domain": "$CLUSTER_DOMAIN",

"hyperthreading": "all",

"cluster_network_cidr": "$CLUSTER_CIDR_NET",

"cluster_network_host_prefix": $CLUSTER_HOST_PFX,

"service_network_cidr": "$CLUSTER_CIDR_SVC",

"user_managed_networking": true,

"vip_dhcp_allocation": false,

"host_networks": "$CLUSTER_HOST_NET",

"hosts": [],

"ssh_public_key": "$CLUSTER_SSHKEY",

"pull_secret": $PULL_SECRET

}

EOF

HINT: If you receive an error that you cannot overwrite the file, then make sure to run setopt clobber in your shell (zsh), or set +o noclobber in bash.
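Because the file is produced by shell substitution, a stray quote in one of the variables can leave it malformed. The sketch below checks that the result parses as JSON and that a few of the fields have the expected types. `check_deployment_json` is a hypothetical name, and the particular fields checked are my own choice rather than an official validation rule.

```shell
# Hypothetical helper: succeed only if the file parses as JSON and a few
# of the fields written by the heredoc have the expected types.
check_deployment_json() {
  jq -e '
    (.name | type == "string" and length > 0)
    and (.pull_secret | type == "string")
    and (.cluster_network_host_prefix | type == "number")
  ' "$1" > /dev/null
}
```

Running `check_deployment_json ./deployment.json && echo OK` before posting the file gives a quick local sanity check; a non-zero exit means the file is either not valid JSON or is missing one of those fields.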

HINT: There's a new option in OpenShift v4.8.x that allows you to create a single-node OpenShift cluster (referred to as SNO). If this is what you want, then you will need to add the following line to the ./deployment.json file:

"high_availability_mode": "None"

As an example, a SNO deployment will look like the following.

cat << EOF > ./deployment.json

{

"kind": "Cluster",

"name": "$CLUSTER_NAME",

"openshift_version": "$CLUSTER_VERSION",

"ocp_release_image": "$CLUSTER_IMAGE",

"base_dns_domain": "$CLUSTER_DOMAIN",

"hyperthreading": "all",

"cluster_network_cidr": "$CLUSTER_CIDR_NET",

"cluster_network_host_prefix": $CLUSTER_HOST_PFX,

"service_network_cidr": "$CLUSTER_CIDR_SVC",

"user_managed_networking": true,

"vip_dhcp_allocation": false,

"high_availability_mode": "None",

"host_networks": "$CLUSTER_HOST_NET",

"hosts": [],

"ssh_public_key": "$CLUSTER_SSHKEY",

"pull_secret": $PULL_SECRET

}

EOF
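If a multi-node deployment.json already exists, the SNO variant can also be derived from it instead of rewriting the whole heredoc. This is a sketch of that idea using jq's object merge; `with_sno` is a hypothetical helper name.

```shell
# Hypothetical helper: print a copy of a deployment file with the
# high_availability_mode field set to "None" (the SNO setting shown above).
with_sno() {
  jq '. + {high_availability_mode: "None"}' "$1"
}
```

For instance, `with_sno ./deployment.json > ./deployment-sno.json` writes the SNO version to a separate file and leaves the original untouched.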

Part IV: Creating the Cluster

Now you can create the cluster via the Assisted-Service API with the following command.

curl -s -X POST "https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters" \

-d @./deployment.json \

--header "Content-Type: application/json" \

-H "Authorization: Bearer $TOKEN" \

| jq '.id'

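IMPORTANT: The previous command will generate a CLUSTER_ID, which will need to be exported for future use. Set this variable from the output of the previous command, as shown below. The command is repeated here only to show its output; do not run it a second time, since each POST creates a new cluster.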

curl -s -X POST "https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters" \

-d @./deployment.json \

--header "Content-Type: application/json" \

-H "Authorization: Bearer $TOKEN" \

| jq '.id'

"0da7cf59-a9fd-4310-a7bc-97fd95442ca1"

CLUSTER_ID="0da7cf59-a9fd-4310-a7bc-97fd95442ca1"
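Copying the ID by hand makes it easy to keep the surrounding quotes or to paste an error message by mistake. The sketch below strips the quotes and checks that the value has the shape of a UUID, as the ID above does. `clean_cluster_id` is a hypothetical helper name.

```shell
# Hypothetical helper: strip surrounding double quotes from a value and
# print it only if it has the shape of a UUID (8-4-4-4-12 hex digits).
clean_cluster_id() {
  local id
  id=$(printf '%s' "$1" | tr -d '"')
  if printf '%s' "$id" | grep -Eq '^[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}$'; then
    printf '%s\n' "$id"
  else
    echo "unexpected cluster id: $1" >&2
    return 1
  fi
}
```

With this in place, `CLUSTER_ID=$(clean_cluster_id '"0da7cf59-a9fd-4310-a7bc-97fd95442ca1"')` stores the bare ID, and anything that is not ID-shaped is rejected with a non-zero exit.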

Part V: Updating the Cluster for CNI

OpenShift supports two CNI options out of the box: OpenShiftSDN and OVNKubernetes. Both have their own benefits and trade-offs, which I will cover in another article. The options discussed in this article are the following.

- OpenShiftSDN

- OVNKubernetes

- Calico (third-party)

There are also other third-party CNI options available. A list of third-party network plug-ins can be found HERE. I have written separate installation articles for Calico and Cilium, specifically related to the Assisted-Installer process. If you wish to modify the CNI, now is the time to do so. Here are a couple of examples below.

If you want to deploy OpenShiftSDN, then use the following curl command (via PATCH).

curl \

--header "Content-Type: application/json" \

--request PATCH \

--data '"{\"networking\":{\"networkType\":\"OpenShiftSDN\"}}"' \

-H "Authorization: Bearer $TOKEN" \

"https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters/$CLUSTER_ID/install-config"

If you would rather deploy OVNKubernetes, then use the following curl command (via PATCH).

curl \

--header "Content-Type: application/json" \

--request PATCH \

--data '"{\"networking\":{\"networkType\":\"OVNKubernetes\"}}"' \

-H "Authorization: Bearer $TOKEN" \

"https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters/$CLUSTER_ID/install-config"

Since both OVNKubernetes and OpenShiftSDN are supported by OpenShift natively, these will install the appropriate CNI automatically. If you choose a third-party option, it's important to note that modifications will be required in order to deploy it on OpenShift. I've written some examples of how to do this for other CNI options.
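The request body in the PATCH commands above is a JSON string that itself contains escaped JSON, which is easy to mistype. The sketch below builds that same string for a given network type. `install_config_patch` is a hypothetical helper name, and the function only reproduces the body format used in this article.

```shell
# Hypothetical helper: print the string-wrapped install-config override
# used in the PATCH examples above, for the network type passed as $1.
install_config_patch() {
  # In the printf format, \\ yields one literal backslash, so each inner
  # double quote is emitted as \" inside the outer JSON string.
  printf '"{\\"networking\\":{\\"networkType\\":\\"%s\\"}}"\n' "$1"
}
```

The output can be passed to curl with `--data "$(install_config_patch OpenShiftSDN)"`, which sends the same body as the hand-written OpenShiftSDN example.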

Now you can review your changes by issuing the following curl request below.

curl -s -X GET \

--header "Content-Type: application/json" \

-H "Authorization: Bearer $TOKEN" \

"https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters/$CLUSTER_ID/install-config" \

| jq -r '.'

We're not quite done with our deployment yet, but we're almost there. Let's continue with other potential modifications like static IP addressing in the next section.

Part VI: Static IP Addresses

Static IP addressing for each host can be applied at this time using what's known as an NMState configuration. This is a new option for OpenShift 4.8.x. It allows an administrator to apply static IP addresses to each MAC address defined for a given host. This really requires its own blog post, and I wrote about this process in detail in my other article entitled "Static Networking with Assisted-Installer".

In order to create static IP addresses for your deployment, you will need to run through the steps in that article, and return to the next section in this article when you are complete.

Perfect! You're almost ready. Now, let's generate the ISO and install the cluster.

Part VII: Generate and Download the Installation Media

IMPORTANT: If you are using static IP addresses for your deployment, skip this section, as you have already created and downloaded the installation media.

Now it's time to generate an ISO (which is your "live" installation media), and download it. Once downloaded, it can be installed on your systems - either virtual or physical. You can read through some of my other articles if you wish to use Redfish for loading the ISO for your virtual or physical systems.

In order to generate and download an ISO, you will have to supply (or resupply) some additional information to the Assisted-Service API. To do this, create another json file, this time calling it iso-params.json. This will be used to generate the deployment ISO.

cat << EOF > ./iso-params.json

{

"ssh_public_key": "$CLUSTER_SSHKEY",

"pull_secret": $PULL_SECRET

}

EOF

Now use the following command to POST a request for Assisted-Service to generate the deployment ISO.

curl -s -X POST "https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters/$CLUSTER_ID/downloads/image" \

-d @iso-params.json \

--header "Content-Type: application/json" \

-H "Authorization: Bearer $TOKEN" \

| jq '.'

Lastly, use curl to download the ISO just generated. This ISO will be used to build the OpenShift cluster, as with any other Assisted-Service deployment.

curl \

-H "Authorization: Bearer $TOKEN" \

-L "https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters/$CLUSTER_ID/downloads/image" \

-o ai-liveiso-$CLUSTER_ID.iso
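If the token has expired, curl may save an error response under the .iso name instead of the image. A quick sanity check is to look for the ISO 9660 signature in the downloaded file. This is a sketch; `looks_like_iso` is a hypothetical helper name, and it checks only the signature, not the integrity of the whole image.

```shell
# Hypothetical helper: succeed only if the file carries the ISO 9660 signature.
# The primary volume descriptor starts at byte offset 32768, and the five
# bytes that follow its type byte are the ASCII string "CD001".
looks_like_iso() {
  local magic
  magic=$(dd if="$1" bs=1 skip=32769 count=5 2>/dev/null | tr -d '\0')
  [ "$magic" = "CD001" ]
}
```

For example, `looks_like_iso "ai-liveiso-$CLUSTER_ID.iso" || echo "download does not look like an ISO"` flags a bad download before you try to boot from it.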

Now it is time to boot the bare metal (or virtual) instance from the ISO you've just downloaded.
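Once the hosts have booted they check in to the API, as described in Part I, and it can be handy to wait for the cluster to reach a given state rather than refreshing by hand. The following is a sketch only. It assumes that a GET on the cluster URL returns a JSON object with a `status` field, and that a value such as `ready` appears there; both are assumptions on my part and are not shown elsewhere in this article. `wait_for_cluster_status` is a hypothetical helper name.

```shell
# Hypothetical helper: poll the cluster until its "status" field equals $1.
# Arguments: wanted status, number of attempts (default 60), delay in
# seconds between attempts (default 30).
# Assumes ASSISTED_SERVICE_API, CLUSTER_ID and TOKEN are set as in this article.
wait_for_cluster_status() {
  local want=$1 tries=${2:-60} delay=${3:-30} status i=0
  while [ "$i" -lt "$tries" ]; do
    status=$(curl -s \
      -H "Authorization: Bearer $TOKEN" \
      "https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters/$CLUSTER_ID" \
      | jq -r '.status')
    echo "cluster status: $status"
    [ "$status" = "$want" ] && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```

A call such as `wait_for_cluster_status ready 10 30` would check ten times, thirty seconds apart. Because the token is short-lived, a longer wait would also need TOKEN to be regenerated between attempts.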

Part VIII: Customizing OpenShift Deployments

IMPORTANT: If you are using either OpenShiftSDN or OVNKubernetes, and you do not want to deploy any other workloads on top of your OpenShift deployment as part of the initial installation, you can skip this section entirely.

It is at this point that I want to explain how the Assisted-Service works in a bit more detail. You can think of the Assisted-Service as a UPI deployment tool that ships with a number of defaults out of the box, all of it fronted by an extremely useful API. This is my very poor way of saying that pretty much anything you can do with a UPI deployment, you can also do with the Assisted-Service, so long as you understand the API and what it's doing under the hood.

Think of the Assisted-Service as working in various phases. One phase is defining the cluster, at a high level. Another phase is configuring the individual IP addresses and mapping them to the appropriate MAC addresses. This needs to be done before the ISO is generated because, obviously, the hosts need to be configured before they can reach back to the API for instructions on what to do.

This leads me to the next phase, which is most relevant to this section. Once the hosts are able to communicate with the Assisted-Service API, you can modify aspects of your deployment by uploading YAML documents to either the manifests or openshift directory. These directories are the same ones created when an administrator runs the following command.

openshift-install create manifests --dir=<installation_directory>

With this in mind, you can now make the modifications for your custom CNI deployment, and then come back to this article for the final steps.

- Customizations for Calico

- Customizations for Cilium

Part IX: Start the Installation

The final step is to initiate the installation of the cluster through the Assisted-Service API. You can always finalize the deployment through the Red Hat Assisted-Installer WebUI. But for completeness, use the following steps to finalize the installation without having to use the UI.

Using the same variables we defined earlier (at the start of this article), issue the following command to confirm the IP subnet the host machines are attached to. For clarity, this is the subnet which is shared between each of the OpenShift members (the host-level subnet).

curl \

--header "Content-Type: application/json" \

--request PATCH \

--data '"{\"host_networks\":\"'$CLUSTER_HOST_NET'\"}"' \

-H "Authorization: Bearer $TOKEN" \

"https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters/$CLUSTER_ID"

Finally, the moment you've been working so hard for: installation! Once the following command has been accepted, there cannot be any further modifications to the cluster. The installation will take approximately 30-45 minutes, depending on the size/specs of your nodes, so run the command and go grab a coffee. You've earned it!

curl -s -X POST \

--header "Content-Type: application/json" \

-H "Authorization: Bearer $TOKEN" \

"https://$ASSISTED_SERVICE_API/api/assisted-install/v1/clusters/$CLUSTER_ID/actions/install"

That's a wrap! If you have any questions, please feel free to reach me on Twitter @v1k0d3n.